The Curse of AI in the security industry

A few weeks ago I spoke at a Gartner-organized CSO summit. Not “my thing”, but one of the lessons from my first startup was that you have to build a business and not a product. Sales is not “my thing”, but a startup without sales is a technology folly.

The Crash Override north star is to be able to tell users what to work on now, next or never. We are in the relatively early stages of laying down long-term foundations, something we always openly explain to people, but I was still a little shocked when, after talking to a CSO about what we do, they said, “I want to know how you plan to use AI to tell me what to work on now”.

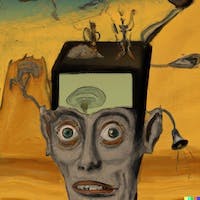

I was taken aback at the sheer hype that AI now has in our industry. It seems that if you don't have it, you are now considered ‘legacy’. I was also, on reflection, disappointed that a potential customer was telling me that we needed to use a particular technology solution before understanding how deeply we already understood the problem, or even being interested in hearing how we planned to solve it.

Delivering a solution to a gunshot-to-the-chest security problem as fast as possible is in everyone's interest, and as long as it's a sane and effective approach, using AI or not doesn't matter. It just doesn't matter. Right tool for the job. It's that bloody simple. That's why we used Nim for Chalk™.

Perhaps I should have just said “ChatGPT”, bolted a ChatGPT interface onto the product in a few hours on the plane home so we delivered on the promise, and moved on, but that's not our style.

That interaction, though, is the curse of AI for the security industry. It's the hype of a hype cycle gone mad.

Term Sheet by Fortune is a daily report of which companies have been venture funded. A year ago, after supply chain attacks hit the mainstream press, supply chain companies were being funded by all the big investors, constantly. It was a few a week, and sometimes a few a day. A year later we are still seeing startups being funded to enter that over-saturated market, but the backers are mainly the smaller investment firms who couldn't get in on the earlier hot deals and are now chasing less interesting companies.

These days Term Sheet is swamped with AI and it's nuts.

Here are a few from the last week or so:

- UserEvidence, a Jackson Hole, Wyo.-based AI platform that automatically generates social content, case studies, and aggregates customer feedback, raised $9 million in Series A funding. Crosslink Capital led the round and was joined by Founder Collective, Afore, and Next Frontier Capital.

Hmm, customer case studies generated by AI. Interesting.

- Meeno, a San Francisco-based AI-powered app that gives relationship advice to users, raised $3.9 million in seed funding. Sequoia Capital led the round and was joined by AI Fund and NEA.

Relationship advice from an AI. Really?

Me: Should you take relationship advice from ChatGPT?

ChatGPT: Taking relationship advice from a chatbot like GPT-3 can be risky. While GPT-3 can provide general information and suggestions based on the text data it has been trained on, it lacks the ability to understand the nuances and complexities of individual relationships.

And that's just a few from the constant stream. For your ongoing entertainment, sign up to Term Sheet here.

It was lab-grown meat a few months ago. Pretty soon it will be lab-grown humans that can use AI to find other AIs to have a relationship with and team up to solve cyber security problems. You heard it here first.

Steve Polyak, an Adjunct Research Professor at the Department of Global Health, is quoted as saying:

“Before we work on artificial intelligence, why don’t we do something about natural stupidity?”

So how the hell are we going to break the cycle and avoid stupid technological security solutions being built to solve problems that don’t need them?

I think hope lies in developer-led adoption. Yes, I am biased because that is Crash Override's go-to-market strategy, but if, and yes I accept it is an ‘if’, the CSO above is representative of the security buying journey, then think about this.

- A CSO is told about a problem, agrees they have it, and agrees that it’s a priority
- They ask a security vendor how they use AI to solve it
- Based on the answer, they decide if it is worth investing time in trying

A developer, on the other hand, follows a very different and widely accepted buying journey.

- A developer knows (rather than is told) they have a problem
- They look for a solution to their problem themselves
- They learn about options and frame them against their expected findings
- They learn how the options work, and if they would work for their use case
- They look for peer validation in the form of case studies and community recommendations
- They decide if it is worth investing time in trying the solution

That is a much more rational way, in my opinion, to decide the best way to solve problems, and the one we are hitching our wagon to. It is not an approach that suffers from the curse of AI.

I have no doubt that we will have some AI on our platform before long, but we are going to choose the right tools for the right purpose, and I hope security people will do the same.